The Future Is Here! And it is here to stay. What we could only imagine some years ago is now part of our reality, and AI has become an ever-present topic. AI is everywhere, from banking and healthcare to transportation and entertainment.

Learning more about the impact of these innovations makes us ask ourselves how they will alter our habits, make our everyday activities less cumbersome, and affect our fundamental rights. So, let’s talk about AI’s pros(perity) and cons(istency) in protecting rights.

Pioneering AI. The European Union (“EU” or “Union”) is set to establish the world’s first comprehensive regulatory framework for AI, applying to anyone who develops, deploys, or uses AI systems in the EU, including entities and individuals from third countries that provide AI products or services in the EU. A proposal for a regulation (“AI Act”), published by the European Commission (“Commission”), aims to ensure that AI systems in the Union are safe and aligned with fundamental rights and Union values, and to provide for effective enforcement. It covers numerous AI technologies, including voice assistants, self-driving cars, and chatbots, while systems developed or used exclusively for military purposes fall outside its scope. The AI Act should provide legal certainty to facilitate investment and innovation in AI. At the same time, the development of AI must not result in the fragmentation of the internal market.

Handling Risks. The AI Act practically recognizes four categories of AI systems based on their risk level.

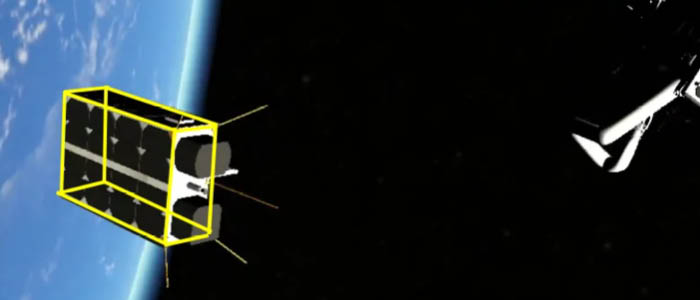

Unacceptable risk in AI. This category covers unethical and harmful practices that are banned outright, such as sending subliminal messages or exploiting the vulnerabilities of certain groups based on age or disability. Other examples are the social scoring of persons, i.e., the evaluation of their trustworthiness based on their social behavior or personality characteristics, and the use of ‘real-time’ remote biometric identification systems in public spaces. The latter may be permitted only exceptionally, when searching for victims of crimes, preventing imminent threats, or combating terrorist attacks.

High risk in AI. These practices are directly related to health, safety, and human rights. The list of them is not exhaustive and will be updated by the Commission regularly. AI tools used in infrastructure, law enforcement, transport, medical procedures, recruitment, financial services, and identity verification may be considered high risk. Due to their importance, they entail an abundance of obligations, primarily for providers of AI, who must ensure: (i) adequate risk assessment procedures and mitigation systems, (ii) quality training and management systems, (iii) human oversight, (iv) detailed logs of AI-generated data, (v) technical documentation, (vi) clear and adequate information to the user, and (vii) conformity of their AI systems with the law. In addition, other participants, such as importers, distributors, and users, have obligations of their own. Users will, for instance, be bound to follow carefully and diligently the instructions for use accompanying these systems.

Limited risk in AI. The AI Act designates these as practices subject to transparency obligations. The idea is to ensure that individuals are aware of the use of AI and can make informed decisions. Therefore, providers are bound to inform individuals that they are communicating with an AI, as in the case of chatbots. Additionally, where AI systems manipulate images, audio, or video content so that it would falsely appear to a person to be authentic or truthful, known as ‘deep fake’ technologies, it must be disclosed that the content has been artificially generated or manipulated.

Minimal or no risk in AI. These applications are unlikely to cause harm to individuals or society and, therefore, can be used freely without specific regulatory requirements. According to the Commission, the vast majority of AI systems currently used in the EU fall into this category (spam filters, translation software, video games, etc.).

To Comply or Not to Comply? According to the proposal, companies committing the most serious breaches of the AI Act could be fined up to EUR 30 million or 6% of their global annual turnover, whichever is higher. For the most prominent players, this could amount to billions in fines if they violate the rules.
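To make the “whichever is higher” rule concrete, here is a minimal sketch of the arithmetic in Python. The function name and the turnover figures are purely illustrative assumptions, not taken from the proposal, and the sketch covers only the top penalty tier described above, not the lower tiers or the factors an authority would weigh when setting an actual fine.

```python
# Illustrative sketch only: upper limit of the fine for the most serious
# breaches, as described above (EUR 30 million or 6% of global annual
# turnover, whichever is higher). Names and inputs are hypothetical.

FIXED_CAP_EUR = 30_000_000      # fixed ceiling stated in the proposal
TURNOVER_SHARE_PERCENT = 6      # share of global annual turnover


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the maximum possible fine in whole euros."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = global_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical companies, chosen only to show both branches of the rule.
print(max_fine_eur(100_000_000))       # smaller company: fixed cap applies
print(max_fine_eur(100_000_000_000))   # very large company: 6% applies
```

The turnover-based limit overtakes the fixed amount once global annual turnover exceeds EUR 500 million, since 6% of EUR 500 million is exactly EUR 30 million.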

Looking Good? Or Not? Yet, as AI becomes more widespread and advanced, concerns have been raised about its possible adverse impacts on society, such as discrimination, job displacement, and privacy violations.

Despite the Union’s evident effort to put in place mechanisms for protecting fundamental rights in general, one may still imagine situations of collective harm to racial, gender, or ethnic groups. One example of collective harm would be racially biased face recognition systems.

Social harm, on the other hand, is harm suffered by the general population. Fake news and deep fakes are already threats to democracy, and with AI, their impact could reach an entirely new scale.

Scaring AI Away? By regulating the field, is the EU becoming less competitive? The EU is often criticized as a heavy regulator, and the AI Act may reinforce this perception. AI businesses may be tempted to migrate from the EU to more relaxed jurisdictions to develop their AI products and services more comfortably. In its drive to regulate, the EU could lose out on the AI boom and continue to lag behind competing markets.

By Ivana Stojanović Raisic, Counsel, Nemanja Sladakovic, Senior Associate, Bojan Tutic, Associate, Vasilije Boskovic, Associate, Gecic Law